Infrastructure as code increases productivity and transparency. When the architecture configuration is stored in version control, changes can be compared to the previous state, and the history becomes visible and traceable. Terraform is an open source command line tool that codifies APIs into declarative configuration files. In this tutorial, Terraform is used to deploy Grafana to OpenShift, including the creation of a service, an external route, the deployment configuration, and persistent volumes.

Prerequisites

Terraform and OpenShift CLI

Before we start, download the Terraform binary and the OpenShift command line tool (CLI). For this example, I am using Terraform v0.11.11 and oc v3.11. Both binaries are also provided in the “bin” directory of the GitHub repository for this tutorial.

The Terraform command line interface (CLI) evaluates and applies Terraform configurations. Terraform uses plugins called providers, each of which defines and manages a set of resource types. In this use case, we use the Kubernetes provider.
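To make the provider choice explicit, a provider block can be added to the configuration. The following is a minimal sketch in Terraform 0.11 syntax. It assumes that the provider authenticates through the local kubeconfig file written by oc login; the version constraint is an assumption based on the provider version used in this tutorial.

```hcl
# Minimal sketch (Terraform 0.11 syntax). No credentials are set here, so
# the provider is assumed to fall back to the local kubeconfig file.
provider "kubernetes" {
  # Allow 1.5.x patch releases starting at 1.5.2; adjust as needed.
  version = "~> 1.5.2"
}
```

Pinning the version keeps terraform init from silently picking up a newer provider release with different behaviour.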

It is recommended to add the bin folder to your environment variable PATH so that you can call both binaries from any location.

Push Grafana image to internal registry

Now, log in to OpenShift with the oc CLI. Type oc login and then select the OpenShift project you want to work with using oc project <project-name>.

Pull the Grafana docker image from Docker Hub to your local Docker instance with

docker pull grafana/grafana

When the download is finished, follow the steps to push an image to the internal image registry.

First, we create an empty image stream with

oc create imagestream grafana

Next, tag the local image you wish to push. Set the registry URL to OpenShift’s internal registry (in OpenShift, click on the question mark icon left of your username and then click on “About”) and the project placeholder to your project name.

docker tag grafana/grafana <registry>/<project>/grafana:latest

Now, push the local image to the internal registry.

docker push <registry>/<project>/grafana:latest

When the upload is done, you can find the image in OpenShift under Builds->Images.

Workflow with Terraform and OpenShift CLI

The following Makefile defines commands to initialise Terraform, to apply the Terraform templates, and to create an external route for Grafana in OpenShift. Of course, you can also call the commands directly in any shell you like.

.PHONY: init
init:
	terraform init
	terraform get

.PHONY: plan
plan:
	terraform plan

.PHONY: apply
apply:
	terraform apply

.PHONY: create-route
create-route:
	oc apply -f route.json

Let's start by initialising Terraform. make init (or terraform init) initializes various local settings and data that will be used by subsequent commands. The provider plugin binaries are downloaded from the Terraform repository to a .terraform/plugins subdirectory of the working directory. In this example, we make use of the Kubernetes provider (provider.kubernetes v1.5.2).

> terraform init
Initializing provider plugins...
- Checking for available provider plugins on https://releases.hashicorp.com...
- Downloading plugin for provider "kubernetes" (1.5.2)...

Terraform has been successfully initialized!

Second, we run make plan to execute the plan step of Terraform. This command outputs the execution plan before actually applying it with make apply. The plan command outputs a list of the actions (create, remove, update) and the specific changes it intends to execute. If you have run make apply before, it compares the previous state stored in terraform.tfstate with the current plan.

> terraform plan
An execution plan has been generated and is shown below.
Resource actions are indicated with the following symbols:
  + create

Terraform will perform the following actions:

  + kubernetes_config_map.grafana-config

Third, make apply applies the Terraform templates to the selected OpenShift project. This includes the creation of several resources, such as a service, a pod, a container, and persistent volumes.

Finally, make create-route creates (or updates) an external route to the service. The -f argument indicates that the configuration is read from the given JSON file.

Before you go ahead, replace the values of the project and application name variables in the variables.tf file with your own settings. Also remember to use your values instead of my examples wherever they appear below.
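For orientation, a variables.tf along these lines would provide the two variables referenced as var.project and var.app in the snippets of this tutorial. This is a sketch rather than the repository's actual file; the defaults are the example values that appear in the command output shown in this tutorial, and the descriptions are my own wording.

```hcl
# variables.tf (sketch): replace the defaults with your own settings.
variable "project" {
  description = "OpenShift project (Kubernetes namespace) to deploy into"
  default     = "grafana-test-project"
}

variable "app" {
  description = "Application name, used for resource names and labels"
  default     = "grafana"
}
```

Instead of editing the defaults, a value can also be overridden for a single run, for example with terraform apply -var 'project=my-project'.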

Grafana config map

First, we want to create a Kubernetes ConfigMap representing the default configuration of Grafana.

The ConfigMap object provides configuration data to containers in the form of key-value pairs.

The Terraform snippet below uses the kubernetes_config_map resource of the Kubernetes provider. It will create a ConfigMap named “grafana-config” in OpenShift. You can also change the name of the ConfigMap; just make sure that it is unique within the project.

resource "kubernetes_config_map" "default" {
  metadata {
    name = "grafana-config"
    namespace = "${var.project}"
    labels = {
      app = "${var.app}"
      version = "1.0.0"
    }
    annotations {
      project = "${var.app}"
    }
  }
  data {
    grafana.ini = <<EOF
##################### Grafana Configuration Example #####################
...
EOF
  }
}

The data property contains the actual configuration data for Grafana. As it is rather long, I have not included it here, but you can copy it from this GitHub account. Copy everything from line 7 onwards. All properties are commented out as they have default values. If you want to change something, uncomment the respective line.
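Because the Grafana configuration is long, one alternative to an inline heredoc is to keep it in a separate file and read it with Terraform's file() interpolation function. The sketch below is a variant that would replace the resource above, not an addition to it. It assumes a grafana.ini file (a hypothetical location) in the same directory as the .tf file; labels and annotations are omitted for brevity.

```hcl
# Sketch: same ConfigMap, but the configuration is read from a file.
# Assumes grafana.ini sits next to this .tf file (hypothetical location).
resource "kubernetes_config_map" "default" {
  metadata {
    name      = "grafana-config"
    namespace = "${var.project}"
  }

  data {
    # file() returns the content of the file as a string.
    grafana.ini = "${file("${path.module}/grafana.ini")}"
  }
}
```

This keeps the Terraform file short and lets the configuration file be edited and compared on its own in version control.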

Open a terminal in the directory where the config map file is located. From there, run terraform apply. If successful, you will get the following output.

> terraform apply

Terraform will perform the following actions:

+ kubernetes_config_map.default

...

metadata.#: "" => "1"

metadata.0.annotations.%: "" => "1"

metadata.0.annotations.project: "" => "grafana"

metadata.0.generation: "" => "<computed>"

metadata.0.labels.%: "" => "2"

metadata.0.labels.app: "" => "grafana"

metadata.0.labels.version: "" => "1.0.0"

metadata.0.name: "" => "grafana-config"

metadata.0.namespace: "" => "grafana-test-project"

metadata.0.resource_version: "" => "<computed>"

metadata.0.self_link: "" => "<computed>"

metadata.0.uid: "" => "<computed>"

kubernetes_config_map.grafana-config: Creation complete after 4s (ID: grafana-test-project/grafana-config)

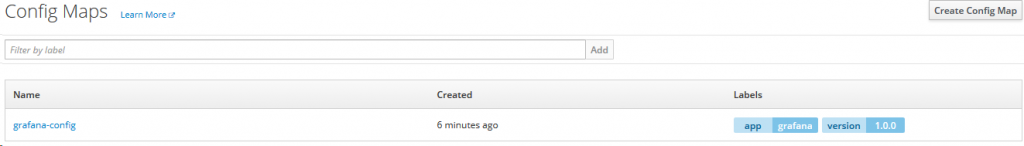

Apply complete! Resources: 1 added, 0 changed, 0 destroyed.As you can see, Terraform created a new config named “grafana-config” in the namespace “grafana-test-project” with one annotation and two labels. To verify that the config map was successfully created, go to OpenShift. Under Resources -> Config Maps you should see a new entry named grafana-config.

Next, we want to create a service which will expose our pod running Grafana.

Service definition

A Kubernetes service is an abstraction which defines a set of Pods usually determined by a label selector. To codify this in Terraform, we use the kubernetes_service resource.

In the snippet below, the label app=“grafana” matches the one on the ConfigMap we just created. The selector also links the service to the DeploymentConfig named “grafana”.

resource "kubernetes_service" "default" {
  metadata {
    name = "${var.app}"
    namespace = "${var.project}"
    labels {
      app = "${var.app}"
    }
  }
  spec {
    port {
      name = "3000-tcp"
      port = "3000"
      target_port = "3000"
      protocol = "TCP"
    }
    selector {
      deploymentconfig = "grafana"
    }
    session_affinity = "None"
    type = "ClusterIP"
  }
}

By setting type=“ClusterIP”, the service is exposed on a cluster-internal IP. The service is therefore only reachable from within the cluster. It listens on port 3000 and maps it to port 3000 of the assigned pods.

3000 is the default HTTP port that Grafana listens on if you haven’t configured a different port.

Run terraform apply to create the service.

> terraform apply
...
Terraform will perform the following actions:

  + kubernetes_service.default
      id: <computed>
      load_balancer_ingress.#: <computed>
      ...

kubernetes_service.default: Creation complete after 1s (ID: grafana-test-project/grafana)

Apply complete! Resources: 1 added, 0 changed, 0 destroyed.

Open OpenShift and go to Applications->Services. There, you should see a new entry for our service.
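The cluster-internal IP assigned to the service can also be read back through Terraform instead of the web console. The following sketch adds an output for it; the output name grafana_cluster_ip is hypothetical, and the attribute path assumes the kubernetes_service resource named "default" from this tutorial.

```hcl
# Sketch: expose the service's cluster-internal IP as a Terraform output.
# The output name is hypothetical.
output "grafana_cluster_ip" {
  value = "${kubernetes_service.default.spec.0.cluster_ip}"
}
```

After the next terraform apply, the value is printed at the end of the run and can be queried again with terraform output grafana_cluster_ip.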

Deployment configuration

The DeploymentConfig is OpenShift’s template for deployments. It defines the desired state of the component, that is, the deployment strategy, the replica count, volumes, volume mounts, and triggers which cause deployments to be created automatically.

The Terraform resource kubernetes_deployment can help us in this case. Note that it creates a Kubernetes Deployment rather than an OpenShift DeploymentConfig; it serves the same purpose here.

resource "kubernetes_deployment" "default" {
  metadata {
    name = "${var.app}"
    namespace = "${var.project}"
    labels {
      app = "${var.app}"
      deploymentconfig = "${var.app}"
    }
  }
  spec {
    selector {
      match_labels {
        app = "${var.app}"
        deploymentconfig = "${var.app}"
      }
    }
    strategy {
      type = "Recreate"
    }
    template {
      metadata {
        labels {
          app = "${var.app}"
          deploymentconfig = "${var.app}"
        }
      }
      spec {
        container {
          image = "grafana/grafana:latest"
          name = "grafana"
          port {
            container_port = "3000"
            protocol = "TCP"
          }
          resources {
            limits {
              cpu = "300m"
              memory = "256Mi"
            }
            requests {
              cpu = "150m"
              memory = "256Mi"
            }
          }
          volume_mount {
            mount_path = "/var/lib/grafana"
            name = "volume-xdtzh"
          }
          volume_mount {
            mount_path = "/etc/grafana/grafana.ini"
            name = "volume-et7q2"
            sub_path = "grafana.ini"
          }
        }
        volume {
          name = "volume-xdtzh"
          persistent_volume_claim {
            claim_name = "grafana-persistence-volume"
          }
        }
        volume {
          name = "volume-et7q2"
          config_map {
            default_mode = "420"
            items {
              key = "grafana.ini"
              path = "grafana.ini"
            }
            name = "grafana-config"
          }
        }
      }
    }
  }
}

As usual, the resource defines a metadata and a spec block. The metadata labels and the spec selector match_labels must match (app and deploymentconfig in both blocks). There will be only one replica by default. The container is accessible on port 3000 over TCP (the port block of the container).

Furthermore, we allocate CPU and memory resources for the container (the resources block). Containers can specify a CPU request and limit. The container is guaranteed the amount of resources requested, but it is not allowed to exceed the limit.

If imagePullPolicy is set to IfNotPresent, OpenShift only pulls the Grafana image if it does not already exist on the node. If the tag is latest and no policy is set, as in the snippet above, OpenShift defaults imagePullPolicy to Always. Here, the grafana/grafana image is taken from the internal registry (the image attribute of the container).
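In the Terraform Kubernetes provider, the pull policy corresponds to the image_pull_policy attribute of the container block. The fragment below is a sketch of how it could be set. It is not a complete file, and it assumes that the image pushed earlier should be referenced by its full registry path; <registry> is the same placeholder used in the docker tag step.

```hcl
# Fragment, not a complete file: this block belongs inside
# template { spec { ... } } of the kubernetes_deployment resource.
# <registry> is a placeholder for the internal registry address.
container {
  name  = "grafana"
  image = "<registry>/${var.project}/grafana:latest"

  # Only pull when the image is not already present on the node.
  image_pull_policy = "IfNotPresent"
}
```

The remaining attributes of the container (port, resources, volume mounts) stay as they are in the deployment definition.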

We create a persistent volume for the data in /var/lib/grafana (database and plugins). The Grafana configuration file, located at /etc/grafana/grafana.ini, is mounted as well (the second volume_mount block and the config_map volume). The sub_path property of a volume mount specifies a subpath inside a volume instead of the volume’s root.

> terraform apply

kubernetes_deployment.default: Refreshing state... (ID: grafana-test-project/grafana)

An execution plan has been generated and is shown below.

Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

+ kubernetes_deployment.default

...

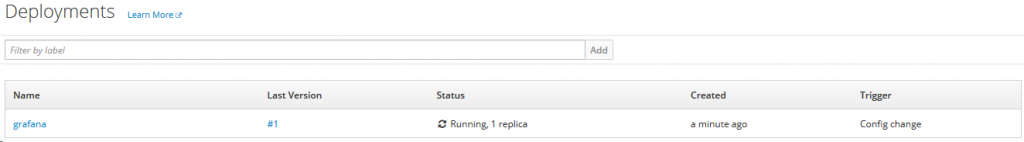

Apply complete! Resources: 1 added, 0 changed, 0 destroyed.Afterwards, several new resources should be created. You can find a new pod under Applications->Pods and a new deployment in Applications->Deployments.

The deployment was successful if both the deployment and the pod show the status “running”.

Persistent volumes and claims

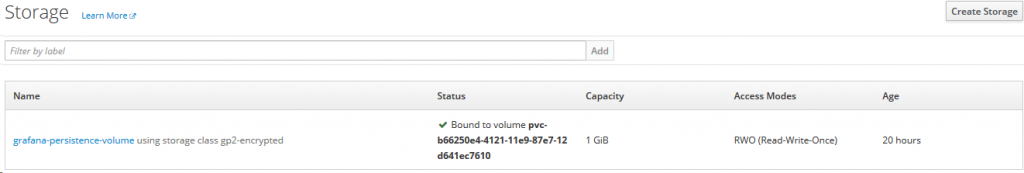

Containers are stateless by nature. This means that whenever the container is recreated or shut down, your data will be lost. Kubernetes persistent volumes and persistent volume claims give us a solution to this problem. The persistent volume is a specific storage resource. The claim is a request for such a resource.

To use them, we define what volumes we want to provide and how to mount them in the pod. We have already defined this information in the DeploymentConfig under the “volume_mount” and “volume” entries. What is left is to actually create them. Each claim is paired with a volume.

In OpenShift, click on “Storage” in the sidebar and then on the “Create storage” button. Enter “grafana-persistence-volume” as the name and click on “Create”.

Additionally, the GitHub repository provides a volumes.tf file which creates the kubernetes_persistent_volume and kubernetes_persistent_volume_claim resources. Due to missing permissions, they do not work in the OpenShift Web Console.
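For illustration, a persistent volume claim expressed in Terraform could look roughly like the following. This is a sketch under stated assumptions, not the content of the repository's volumes.tf: it assumes that the cluster provisions storage dynamically and that your user is allowed to create claims. The access mode and the storage size are assumed values.

```hcl
# Sketch only, not the repository's volumes.tf.
resource "kubernetes_persistent_volume_claim" "default" {
  metadata {
    # Must match claim_name in the volume block of the deployment.
    name      = "grafana-persistence-volume"
    namespace = "${var.project}"
  }

  spec {
    # Assumed access mode: mounted read-write by a single node.
    access_modes = ["ReadWriteOnce"]

    resources {
      requests {
        storage = "1Gi" # assumed size
      }
    }
  }
}
```

If the claim was already created through the web console as described above, do not apply this resource as well, because the name would clash.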

External route

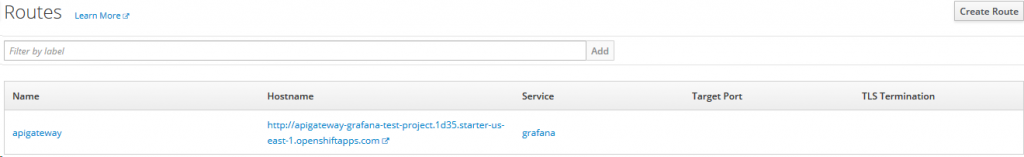

A route is OpenShift’s native resource to expose a service through a URL to the outside world. The Terraform provider for Kubernetes does not have a resource to manage routes because routes are native to OpenShift.

Therefore, we define the route specification in a JSON file. Again, namespace is your project, and the name is free to choose (for example “apigateway”). The spec is rather simple in this case as I am not using any additional options (sticky sessions, sharding, TLS, etc.). The route points to a service named “grafana”.

{
  "apiVersion": "v1",
  "kind": "Route",
  "metadata": {
    "name": "apigateway",
    "namespace": "grafana-test-project",
    "annotations": {
      "project": "grafana-test-project"
    }
  },
  "spec": {
    "to": {
      "kind": "Service",
      "name": "grafana"
    }
  }
}

To create the route, use

oc apply -f terraform/route.json

In OpenShift, go to Applications->Routes and click on the Hostname entry for the newly created route. If everything was successful, it opens a new browser tab and the landing page of Grafana is shown.
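Since the route lives outside Terraform, one possible way to keep it in the same workflow is to let Terraform run the oc command through a null_resource with a local-exec provisioner. This is a sketch of that general technique, not part of this tutorial's repository. It assumes that oc is on the PATH, that you are logged in, and that route.json is in the working directory; the resource name grafana_route is hypothetical.

```hcl
# Sketch: run the oc command from Terraform.
resource "null_resource" "grafana_route" {
  # Re-run the provisioner whenever the content of route.json changes.
  triggers {
    route_sha1 = "${sha1(file("route.json"))}"
  }

  provisioner "local-exec" {
    command = "oc apply -f route.json"
  }
}
```

This uses the null provider, so terraform init has to be run again before the next apply. Terraform does not track the route itself in this setup, so terraform destroy will not remove it.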

You can log in to Grafana using username admin and password admin.